CAT Quant : Probability – Important Concepts and Formulas

Basics

- Sample Space – The sample space (S) is the set of all possible outcomes of an experiment.

- Event – An event (E) is a subset of the sample space.

- Independent Events: Events are independent if the occurrence of one does not affect the probability of occurrence of the other.

- Dependent Events: Events are dependent if the occurrence of one affects (partially or totally) the probability of occurrence of the other.

- Mutually Exclusive Events: Events are mutually exclusive if the occurrence of any one of them prevents the occurrence of all others.

- Compatible (Mutually Non-Exclusive) Events: Events that are not mutually exclusive, i.e. that can occur together, are known as compatible or mutually non-exclusive events.

- Collectively Exhaustive Events – A set of events is collectively exhaustive if at least one of them is bound to occur, i.e. together they cover the entire sample space.

- Exhaustive number of Cases – The total number of possible outcomes of a random experiment is known as the exhaustive number of cases.

- Favorable number of Cases – The favorable number of cases for an event is the number of outcomes of the experiment whose occurrence ensures that the event happens.
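To make these definitions concrete, here is a minimal Python sketch. It assumes an illustrative experiment that the text does not specify (tossing two coins), models the sample space as a set of outcome tuples and each event as a subset of it. Under that model "mutually exclusive" means "pairwise disjoint" and "collectively exhaustive" means "the union is the whole sample space". The helper names are hypothetical.

```python
from itertools import product

# Illustrative assumption: the experiment is tossing two coins.
S = set(product("HT", repeat=2))  # sample space: every ordered pair of outcomes

# Events are subsets of the sample space.
two_heads = {s for s in S if s.count("H") == 2}
exactly_one_head = {s for s in S if s.count("H") == 1}
no_heads = {s for s in S if s.count("H") == 0}
first_is_head = {s for s in S if s[0] == "H"}


def mutually_exclusive(*events):
    """True if no two of the events share an outcome (pairwise disjoint)."""
    return all(not (e & f) for i, e in enumerate(events) for f in events[i + 1:])


def collectively_exhaustive(sample_space, *events):
    """True if at least one of the events must occur (union covers the sample space)."""
    return set().union(*events) == sample_space


print(len(S))                  # exhaustive number of cases: 4
print(len(exactly_one_head))   # favorable cases for "exactly one head": 2
print(mutually_exclusive(two_heads, exactly_one_head, no_heads))          # True
print(collectively_exhaustive(S, two_heads, exactly_one_head, no_heads))  # True
print(mutually_exclusive(two_heads, first_is_head))  # False: both contain ('H', 'H')
```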

Probability

- Probability is the measure of the likelihood that an event will occur. It is expressed as a number between 0 and 1, where 0 indicates impossibility and 1 indicates certainty.

- The probability of an event E, denoted by P(E), is the ratio of the number of favorable outcomes (m) to the total number of outcomes in the sample space (n), assuming all outcomes are equally likely. $$P(E) = \frac{m}{n} = \frac{Number \ of \ Favorable \ Outcomes}{Total \ Number \ of \ Outcomes}$$

- If an event is certain to happen, then m = n, so P(E) = 1.

- If an event is impossible, then m = 0, so P(E) = 0.

- The complement of an event E, denoted by \( \overline{E} \), is the non-occurrence of E. Its probability is $$P(\overline{E}) = 1 - \frac{m}{n} = 1 - P(E) \\ \therefore \ P(E) + P(\overline{E}) = 1$$

- Important Notations – For two events A and B –

- \( A' \ or \ \overline{A} \) stands for the non-occurrence or negation of A.

- \( A \cup B \) stands for the occurrence of at least one of A and B.

- \( A \cap B \) stands for the simultaneous occurrence of A and B.

- \( A' \cap B' \) stands for the occurrence of neither A nor B.

- \( A \subseteq B \) stands for “the occurrence of A implies occurrence of B”.

- “Odds in Favor” and “Odds Against” an Event – Let the number of favorable outcomes be a and the number of unfavorable outcomes be b. Then:

- $$Odds \ in \ favor \ of \ an \ event \ E =\frac{Number \ of \ Favorable \ Cases}{Number \ of \ Unfavorable \ Cases} = \frac{a}{b} = \frac{P(E)}{P(\overline{E})} $$

- $$Odds \ against \ an \ event \ E = \frac{Number \ of \ Unfavorable \ Cases}{Number \ of \ Favorable \ Cases} = \frac{b}{a} = \frac{P(\overline{E})}{P(E)}$$

- $$Probability \ of \ an \ event \ P(E) = \frac{a}{a+b} = \frac{Number \ of \ Favorable \ Cases}{Total \ number \ of \ Outcomes} $$

- Problems based on Combination and Permutation –

- Problems based on combination or selection : To solve such kind of problems, we use \( ^{n}C_r = \frac{n!}{(r!(n-r)!)} \)

- Problems based on permutation or arrangement : To solve such kind of problems, we use \( ^{n}P_r = \frac{n!}{(n-r)!} \)

- Addition Theorem of Probability –

- If there are two events A and B – $$ P(A \cup B) = P(A) + P(B) - P(A \cap B) $$
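The formulas in this section can be checked by brute-force counting. The sketch below is a minimal Python illustration, assuming two fair dice as the experiment (an example chosen here, not taken from the text). It uses `fractions.Fraction` to keep probabilities exact, and `math.comb` / `math.perm` (Python 3.8+) for \( ^{n}C_r \) and \( ^{n}P_r \). The event functions such as `sum_is_7` are hypothetical names.

```python
from fractions import Fraction
from itertools import product
from math import comb, perm

# Illustrative assumption: the experiment is rolling two fair dice (36 equally likely outcomes).
S = list(product(range(1, 7), repeat=2))


def prob(event):
    """Classical probability P(E) = m / n (m favorable outcomes out of n)."""
    m = sum(1 for outcome in S if event(outcome))
    return Fraction(m, len(S))


def sum_is_7(outcome):            # event A
    return outcome[0] + outcome[1] == 7


def first_is_6(outcome):          # event B
    return outcome[0] == 6


def a_and_b(outcome):             # simultaneous occurrence: A ∩ B
    return sum_is_7(outcome) and first_is_6(outcome)


def a_or_b(outcome):              # at least one occurs: A ∪ B
    return sum_is_7(outcome) or first_is_6(outcome)


p_a = prob(sum_is_7)              # 6/36 = 1/6
p_not_a = 1 - p_a                 # complement: 5/6
odds_in_favor = p_a / p_not_a     # a/b = 1/5, i.e. 1 : 5
odds_against = p_not_a / p_a      # b/a = 5, i.e. 5 : 1
p_from_odds = Fraction(1, 1 + 5)  # a / (a + b) recovers 1/6

# Addition theorem: P(A ∪ B) = P(A) + P(B) - P(A ∩ B)
p_union_direct = prob(a_or_b)
p_union_formula = prob(sum_is_7) + prob(first_is_6) - prob(a_and_b)

# Selection vs arrangement: choosing 2 of 5 people vs seating 2 of 5 in order.
ways_to_choose = comb(5, 2)       # 10
ways_to_arrange = perm(5, 2)      # 20 = 5! / 3!

# A combination-based probability: both of 2 cards drawn from 52 are aces.
p_two_aces = Fraction(comb(4, 2), comb(52, 2))  # 6/1326 = 1/221

print(p_a, p_not_a, odds_in_favor, odds_against)    # 1/6 5/6 1/5 5
print(p_union_direct, p_union_formula)              # 11/36 11/36
print(ways_to_choose, ways_to_arrange, p_two_aces)  # 10 20 1/221
```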

Mutually Exclusive Events

- If A and B are mutually exclusive events, then $$ n(A \cap B) = 0 \ \Longrightarrow \ P(A \cap B) = 0 \\ P(A \cup B) = P(A) + P(B) $$

- For any three events A, B, C which are pairwise mutually exclusive, $$ P(A \cap B) = P(B \cap C) = P(C \cap A) = P(A \cap B \cap C) = 0 \\ \therefore \ P(A \cup B \cup C) = P(A) + P(B) + P(C) $$

- The probability that any one of several mutually exclusive events happens is equal to the sum of their probabilities, i.e. if \( A_1, A_2, \ldots, A_n \) are mutually exclusive events, then $$ P(A_1 + A_2 + \ldots + A_n) = P(A_1) + P(A_2) + \ldots + P(A_n) \ \Longrightarrow \ P\left( \bigcup_{i=1}^{n} A_i \right) = \sum_{i=1}^{n} P(A_i) $$
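A quick check of the additive rule for mutually exclusive events, as a minimal Python sketch. It assumes one roll of a fair die and three illustrative events (not from the text) chosen so that no two of them share an outcome.

```python
from fractions import Fraction

# Illustrative assumption: one roll of a fair die; events are subsets of the sample space.
S = frozenset(range(1, 7))


def prob(event):
    """P(E) = n(E) / n(S) for equally likely outcomes."""
    return Fraction(len(event & S), len(S))


A = {1}        # roll a 1
B = {2, 3}     # roll a 2 or a 3
C = {6}        # roll a 6

# Pairwise mutually exclusive: no two events share an outcome.
pairwise_disjoint = not (A & B) and not (B & C) and not (C & A)

p_union = prob(A | B | C)              # P(A ∪ B ∪ C) counted directly
p_sum = prob(A) + prob(B) + prob(C)    # sum of the individual probabilities

print(pairwise_disjoint, p_union, p_sum)  # True 2/3 2/3
```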

Mutually Non Exclusive Events

- If A and B are two events which are not mutually exclusive, then $$P(A \cup B) = P(A) + P(B) - P(A \cap B) $$ $$ or \ \ P(A + B) = P(A) + P(B) - P(AB) $$

- For any three events A, B, C which are mutually non exclusive, $$ P(A \cup B \cup C) = P(A) + P(B) + P(C) - P(A \cap B) - P(B \cap C) - P(C \cap A) + P(A \cap B \cap C) $$ $$ or \ \ P(A + B + C) = P(A) + P(B) + P(C) - P(AB) - P(BC) - P(CA) + P(ABC) $$
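The three-event formula can be verified numerically. This minimal Python sketch assumes a classic illustrative setup that is not taken from the text: a number is picked at random from 1 to 100, and A, B, C are the events that it is divisible by 2, 3 and 5. These events overlap (30 is divisible by all three), so the correction terms matter.

```python
from fractions import Fraction

# Illustrative assumption: a number is picked uniformly at random from 1 to 100.
S = range(1, 101)


def prob(pred):
    """P(E) = (numbers satisfying the event) / (all numbers)."""
    return Fraction(sum(1 for x in S if pred(x)), len(S))


def divisible_by(*divisors):
    """Event: x is divisible by every given divisor (an intersection of events)."""
    return lambda x: all(x % d == 0 for d in divisors)


# A: divisible by 2, B: divisible by 3, C: divisible by 5 (not mutually exclusive).
p_a, p_b, p_c = prob(divisible_by(2)), prob(divisible_by(3)), prob(divisible_by(5))
p_ab = prob(divisible_by(2, 3))
p_bc = prob(divisible_by(3, 5))
p_ca = prob(divisible_by(5, 2))
p_abc = prob(divisible_by(2, 3, 5))

formula = p_a + p_b + p_c - p_ab - p_bc - p_ca + p_abc
direct = prob(lambda x: x % 2 == 0 or x % 3 == 0 or x % 5 == 0)

print(formula, direct)  # 37/50 37/50
```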

Independent Events

- If A and B are independent events, then $$ P(A \cap B) = P(A) \cdot P(B) $$ $$ P(A \cup B) = P(A) + P(B) - P(A) \cdot P(B)$$
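As a minimal Python sketch, assuming two fair dice (an illustrative choice, not from the text), the product rule for independent events can be compared with direct counting. The last computation contrasts a dependent pair of events, for which the product rule fails.

```python
from fractions import Fraction
from itertools import product

# Illustrative assumption: two fair dice, 36 equally likely outcomes.
S = list(product(range(1, 7), repeat=2))


def prob(event):
    return Fraction(sum(1 for s in S if event(s)), len(S))


def first_even(s):
    return s[0] % 2 == 0   # A: first die shows an even number


def second_is_6(s):
    return s[1] == 6       # B: second die shows a 6


p_a, p_b = prob(first_even), prob(second_is_6)                # 1/2, 1/6
p_both = prob(lambda s: first_even(s) and second_is_6(s))     # counted directly
p_either = prob(lambda s: first_even(s) or second_is_6(s))    # counted directly

# Contrast: "sum is 12" and "first die is 6" are dependent, so the product rule fails.
p_dep_joint = prob(lambda s: s[0] + s[1] == 12 and s[0] == 6)
p_dep_product = prob(lambda s: s[0] + s[1] == 12) * prob(lambda s: s[0] == 6)

print(p_both, p_a * p_b)                 # 1/12 1/12
print(p_either, p_a + p_b - p_a * p_b)   # 7/12 7/12
print(p_dep_joint, p_dep_product)        # 1/36 1/216
```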

Conditional Probability

- Let A and B be two events associated with a random experiment. The probability of occurrence of A under the condition that B has already occurred (with P(B) ≠ 0) is called the conditional probability of A given B, and it is denoted by \( P {\LARGE(}\frac{A}{B} {\LARGE)} \)

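- Thus, \( P {\LARGE(} \frac{A}{B} {\LARGE)}\) = Probability of occurrence of A, given that B has already happened: $$P {\LARGE(} \frac{A}{B} {\LARGE)} = \frac{P(A \cap B)}{P(B)} = \frac{n(A \cap B)}{n(B)} $$ The second form, using counts, applies when all outcomes of the experiment are equally likely.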

- Sometimes, \( P {\LARGE(} \frac{A}{B} {\LARGE)}\) is also used to denote the probability of occurrence of A when B occurs. Similarly, \( P {\LARGE(} \frac{B}{A} {\LARGE)}\) is used to denote the probability of occurrence of B when A occurs.
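The two forms of the conditional probability formula agree whenever outcomes are equally likely. The minimal Python sketch below assumes two fair dice, with A = first die shows 6 and B = sum is at least 10 (illustrative events, not from the text).

```python
from fractions import Fraction
from itertools import product

# Illustrative assumption: two fair dice, 36 equally likely outcomes.
S = list(product(range(1, 7), repeat=2))


def count(event):
    return sum(1 for s in S if event(s))


def first_is_6(s):
    return s[0] == 6              # A


def sum_at_least_10(s):
    return s[0] + s[1] >= 10      # B: the condition known to have occurred


def a_and_b(s):
    return first_is_6(s) and sum_at_least_10(s)


n = len(S)
# P(A/B) = P(A ∩ B) / P(B)
via_probabilities = Fraction(count(a_and_b), n) / Fraction(count(sum_at_least_10), n)
# P(A/B) = n(A ∩ B) / n(B), valid here because all 36 outcomes are equally likely
via_counts = Fraction(count(a_and_b), count(sum_at_least_10))

print(via_probabilities, via_counts)   # 1/2 1/2
print(Fraction(count(first_is_6), n))  # unconditional P(A) = 1/6
```

Knowing that B occurred raises the probability of A from 1/6 to 1/2, which is exactly what the conditional notation captures.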

Multiplication Theorems on Probability

- If A and B are two events associated with a random experiment, then $$P(A \cap B) = P(A). P{\LARGE(} \frac{B}{A} {\LARGE)} \ \longrightarrow \ If \ P(A) \neq 0 $$ $$or \ \ P(A \cap B) = P(B).P{\LARGE(} \frac{A}{B} {\LARGE)} \ \longrightarrow If \ P(B) \neq 0 $$

- Multiplication theorems for independent events –

- If A and B are independent events associated with a random experiment, then $$P(A \cap B) = P(A).P(B)$$ i.e., the probability of simultaneous occurrence of two independent events is equal to the product of their probabilities. By multiplication theorem, we have \( P(A \cap B) = P(A).P{\LARGE(} \frac{B}{A} {\LARGE)} \) . Since A and B are independent events, therefore, \( P{\LARGE(} \frac{B}{A} {\LARGE)} = P(B) \) $$ Hence \ , \ P(A \cap B) = P(A).P(B)$$

- Probability of at least one of the n independent events –

- If \( p_1, p_2, p_3, \ldots, p_n \) are the probabilities of happening of n independent events \( A_1, A_2, A_3, \ldots, A_n \) respectively, then

- Probability of none of them happening is – $$= (1-p_1) (1-p_2) (1-p_3) \ \ldots \ (1 - p_n)$$

- Probability of at least one of them happening is – $$ = 1 - [ (1-p_1) (1-p_2) (1-p_3) \ \ldots \ (1 - p_n)] $$

- Probability that only the first event happens (and none of the remaining ones) is – $$ = p_1 [(1-p_2) (1-p_3) \ \ldots \ (1 - p_n)] $$

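Both multiplication results and the "at least one" formulas can be checked by enumeration. This minimal Python sketch assumes two illustrative setups that are not in the text: two cards drawn from a standard 52-card deck (with and without replacement), and three independent shooters with hit probabilities 1/3, 1/4 and 1/5. It uses `math.prod` (Python 3.8+) for the products; the helper names are hypothetical.

```python
from fractions import Fraction
from itertools import permutations, product
from math import prod

# Illustrative assumption: cards are labelled 0-51 and cards 0-3 are the four aces.
deck = range(52)
aces = {0, 1, 2, 3}


def enumerate_prob(draws):
    """Share of equally likely ordered draws in which both cards are aces."""
    return Fraction(sum(1 for a, b in draws if a in aces and b in aces), len(draws))


# Without replacement (dependent): P(A ∩ B) = P(A) · P(B/A) = (4/52)(3/51)
dependent = Fraction(4, 52) * Fraction(3, 51)
dependent_enum = enumerate_prob(list(permutations(deck, 2)))

# With replacement (independent): P(A ∩ B) = P(A) · P(B) = (4/52)(4/52)
independent = Fraction(4, 52) * Fraction(4, 52)
independent_enum = enumerate_prob(list(product(deck, repeat=2)))


def p_none(ps):
    """None of the independent events happens: (1 - p1)(1 - p2)...(1 - pn)."""
    return prod(1 - p for p in ps)


def p_at_least_one(ps):
    return 1 - p_none(ps)


def p_only_first(ps):
    """First event happens and all the remaining ones fail."""
    return ps[0] * p_none(ps[1:])


# Illustrative assumption: three independent shooters hit with probabilities 1/3, 1/4, 1/5.
ps = [Fraction(1, 3), Fraction(1, 4), Fraction(1, 5)]

# Brute-force check: add up every hit/miss pattern that contains at least one hit.
brute_force = sum(
    prod(p if hit else 1 - p for p, hit in zip(ps, pattern))
    for pattern in product([True, False], repeat=len(ps))
    if any(pattern)
)

print(dependent, dependent_enum)                         # 1/221 1/221
print(independent, independent_enum)                     # 1/169 1/169
print(p_none(ps), p_at_least_one(ps), p_only_first(ps))  # 2/5 3/5 1/5
print(brute_force)                                       # 3/5
```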

Bayes’ Theorem

- Let \( A_1, A_2, \ldots, A_n \) be mutually exclusive and collectively exhaustive events with respective probabilities \( p_1, p_2, \ldots, p_n \). Let B be an event such that \( P(B) \neq 0 \), and let \( P{\LARGE(} \frac{B}{A_i} {\LARGE)} = q_i \) for i = 1 to n. Then the conditional probability of \( A_i \) given B is $$P{\LARGE(} \frac{A_i}{B} {\LARGE)} = \frac{p_iq_i}{p_1q_1 + p_2q_2 + \ldots + p_nq_n}$$

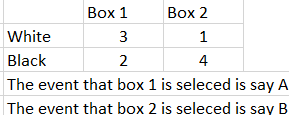

- For Example – Box 1 contains 3 white and 2 black balls. Box 2 contains 1 white and 4 black balls. One of the two boxes is chosen at random and a ball is drawn from it. It turns out to be black. Find the probability that it was drawn from box 1. The data is tabulated below.

| Box | White | Black | Total |
|---|---|---|---|
| Box 1 (event A) | 3 | 2 | 5 |
| Box 2 (event B) | 1 | 4 | 5 |

Let A be the event that box 1 is chosen and B the event that box 2 is chosen, so \( p_1 = P(A) = \frac{1}{2} \) and \( p_2 = P(B) = \frac{1}{2} \). Let C be the event that a black ball is selected. \( \therefore \ P{\LARGE(} \frac{C}{A} {\LARGE)} = \frac{2}{5} \) and \( P{\LARGE(} \frac{C}{B} {\LARGE)} = \frac{4}{5} \). It is given that a black ball has been drawn, and we need \( P{\LARGE(} \frac{A}{C} {\LARGE)} \). Using the result above, $$P{\LARGE(} \frac{A}{C} {\LARGE)} = \frac{P(A) \cdot P{\LARGE(} \frac{C}{A} {\LARGE)}}{P(A) \cdot P{\LARGE(} \frac{C}{A} {\LARGE)} + P(B) \cdot P{\LARGE(} \frac{C}{B} {\LARGE)}} = \frac{{\LARGE(} \frac{1}{2} {\LARGE)}{\LARGE(} \frac{2}{5} {\LARGE)}}{{\LARGE(} \frac{1}{2} {\LARGE)}{\LARGE(} \frac{2}{5} {\LARGE)} + {\LARGE(} \frac{1}{2} {\LARGE)}{\LARGE(} \frac{4}{5} {\LARGE)}} = \frac{2}{2+4} = \frac{1}{3}$$

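Bayes' theorem translates into a few lines of code. The minimal Python sketch below (the function name `bayes` is hypothetical) takes the priors \( p_i \) and likelihoods \( q_i \) as lists and returns every posterior. Applied to the box example it reproduces \( \frac{1}{3} \) for box 1.

```python
from fractions import Fraction


def bayes(priors, likelihoods):
    """Hypothetical helper: posterior P(A_i/B) for every i.

    priors[i] = p_i = P(A_i) for mutually exclusive, collectively exhaustive A_i;
    likelihoods[i] = q_i = P(B/A_i).
    """
    if sum(priors) != 1:
        raise ValueError("priors of exhaustive, mutually exclusive events must sum to 1")
    joint = [p * q for p, q in zip(priors, likelihoods)]  # p_i q_i
    total = sum(joint)                                     # P(B) = p_1 q_1 + ... + p_n q_n
    return [j / total for j in joint]


# Box example: A = box 1 chosen, B = box 2 chosen, C = a black ball is drawn.
priors = [Fraction(1, 2), Fraction(1, 2)]       # P(A), P(B)
likelihoods = [Fraction(2, 5), Fraction(4, 5)]  # P(C/A), P(C/B)
posterior = bayes(priors, likelihoods)
print(posterior[0], posterior[1])  # 1/3 2/3
```

Returning all posteriors at once makes it easy to check that they add up to 1, which is a quick sanity test when the events really are mutually exclusive and exhaustive.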

Expected Value

- Expected value in probability theory is the anticipated average outcome of a random variable, i.e. the probability-weighted average of its possible values.

- It is computed from the probabilities of the events, without needing to count the underlying outcomes. Once the events are defined (they should be mutually exclusive and exhaustive), we need the probability of each event and the monetary value associated with it (i.e., how much money is earned or given away if that event occurs). Then $$ Expected \ Value = \sum_i P_iA_i $$ where \( P_i \) = probability of event \( E_i \) and \( A_i \) = monetary value associated with event \( E_i \).
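A minimal Python sketch of the formula, assuming the events are mutually exclusive and exhaustive so that their probabilities add up to 1. The dice game and its Rs amounts are hypothetical values chosen for illustration.

```python
from fractions import Fraction


def expected_value(outcomes):
    """Sum of P_i * A_i over (probability, monetary value) pairs.

    The events are assumed mutually exclusive and exhaustive, so the
    probabilities must add up to 1.
    """
    if sum(p for p, _ in outcomes) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(p * a for p, a in outcomes)


# Hypothetical game: roll a fair die; win Rs 10 on a 6, lose Rs 2 on anything else.
game = [(Fraction(1, 6), 10), (Fraction(5, 6), -2)]
print(expected_value(game))  # 0, i.e. a fair game

# Expected face value of a single roll of a fair die.
die = [(Fraction(1, 6), face) for face in range(1, 7)]
print(expected_value(die))   # 7/2
```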

Binomial Probability

- Binomial probability refers to the likelihood of achieving a certain number of successes in a series of independent trials, each with the same probability of success.

- If an experiment is repeated n times independently under identical conditions, where p = probability of success in each trial and q = 1 - p = probability of failure in each trial –

- The probability of getting exactly r successes in n trials is $$ ^{n}C_r \, p^{r} q^{n-r} $$
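The formula can be sketched in Python with `math.comb` (Python 3.8+) and exact fractions. The examples (coin tosses and die rolls) are illustrative choices, and the brute-force enumeration is only a cross-check that is practical for small n.

```python
from fractions import Fraction
from itertools import product
from math import comb


def binomial_probability(n, r, p):
    """P(exactly r successes in n independent trials) = nCr * p^r * q^(n - r)."""
    q = 1 - p
    return comb(n, r) * p**r * q**(n - r)


# Illustrative example: exactly 2 heads in 4 tosses of a fair coin.
two_heads = binomial_probability(4, 2, Fraction(1, 2))
# Brute-force check over all 16 equally likely toss sequences.
sequences = list(product("HT", repeat=4))
two_heads_enumerated = Fraction(sum(1 for s in sequences if s.count("H") == 2), len(sequences))

# Illustrative example: exactly one six in 3 rolls of a fair die.
one_six = binomial_probability(3, 1, Fraction(1, 6))

# The probabilities for r = 0..n add up to 1.
total = sum(binomial_probability(4, r, Fraction(1, 2)) for r in range(5))

print(two_heads, two_heads_enumerated, one_six, total)  # 3/8 3/8 25/72 1
```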

